Category: Blog

If you’ve worked in QA or software development, you know the struggle: test debt. Scripts that break with every UI change. Endless hours spent maintaining automation instead of advancing coverage. Fragile frameworks that drain time and resources. For years, this has been the hidden tax on software quality—slowing teams down and preventing them from delivering

A recent email from ASTQB warned testers that to survive in an AI-driven world, they’ll need “broad testing knowledge, not just basic skills.” The advice isn’t wrong—but it misses the bigger picture. The real disruption is already here, and it’s moving faster than most realize. AI systems like AI Script Generation (AISG) and GENI are already generating, executing, and

A recent CIO article revealed a startling reality: 31% of employees admit to sabotaging their company’s generative AI strategy. That’s nearly one in three workers actively slowing down, blocking, or undermining progress. Now layer in the math: most AI initiatives involve dozens of employees. That means statistically, almost every project or proof-of-concept is being impacted by one or
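The "layer in the math" step can be made concrete with a back-of-the-envelope calculation. The sketch below is illustrative only. It assumes that each team member independently falls into the reported 31% group, which real teams do not strictly satisfy, so the output is an intuition rather than a measurement. The function name `p_at_least_one` and the sample team sizes are hypothetical.

```python
# Illustrative sketch: chance that a project team contains at least one
# employee from the reported 31% group.
# ASSUMPTION (not from the survey): members are independent draws at that rate.

SABOTAGE_RATE = 0.31  # share of employees reporting sabotage, per the cited article


def p_at_least_one(team_size: int, rate: float = SABOTAGE_RATE) -> float:
    """Return 1 - (1 - rate) ** team_size under the independence assumption."""
    if team_size < 0:
        raise ValueError("team_size must be non-negative")
    if not 0.0 <= rate <= 1.0:
        raise ValueError("rate must be between 0 and 1")
    # Complement rule: P(at least one) = 1 - P(nobody in the group).
    return 1 - (1 - rate) ** team_size


if __name__ == "__main__":
    # Hypothetical team sizes standing in for "dozens of employees".
    for n in (5, 12, 24, 36):
        print(f"team of {n:>2}: {p_at_least_one(n):.4f}")
```

Under these assumptions, even a 12-person team comes out near 99%, which is the intuition behind "almost every project."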

For decades, software testing has been built on a simple idea: humans write tests, machines run them. That model has persisted since the first commercial recorders appeared in the mid-1990s. Testers would record a flow, edit a script, maintain it as the application evolved, and repeat the cycle endlessly. Tools improved incrementally, but the basic

For decades, software quality assurance has been a human-driven task. Teams write test cases, automate scripts, execute tests manually or with tools, and then maintain those tests across releases. This work is detail-oriented and repetitive, and it has long resisted full automation. In the United States alone, there are roughly 205,000 software QA analysts and testers, according to the Bureau

MIT just issued a wake-up call: despite $30–40 billion poured into generative AI, 95% of corporate AI pilots are failing to deliver financial returns. Enterprises are stuck in proof-of-concept purgatory while startups are racing ahead, scaling AI-native businesses from day one. Peter Diamandis put it bluntly: bureaucracy is the trap. Large organizations are trying to

When artificial intelligence enters the conversation around software testing, a common fear surfaces: Will AI take my job? For QA professionals, who have long been on the frontlines of quality, the rise of AI-driven platforms can feel both exciting and intimidating. The truth is this: AI won’t replace your QA team—it will empower them. Far

Nothing undermines user trust faster than a bug discovered in production. A single glitch—whether it’s a broken checkout button, a failed login, or a data error—can send customers straight to competitors, damage brand reputation, and even spark financial loss. In today’s hyper-competitive digital economy, companies can’t afford to let users be their testers. That’s why

For decades, software teams have relied on traditional test automation frameworks like Selenium to reduce manual effort and improve application quality. While these tools helped advance testing practices, they still depend heavily on human-written scripts, ongoing maintenance, and limited scalability. Enter AI-First Testing. Platforms like Appvance IQ (AIQ) are rewriting the rules by using generative

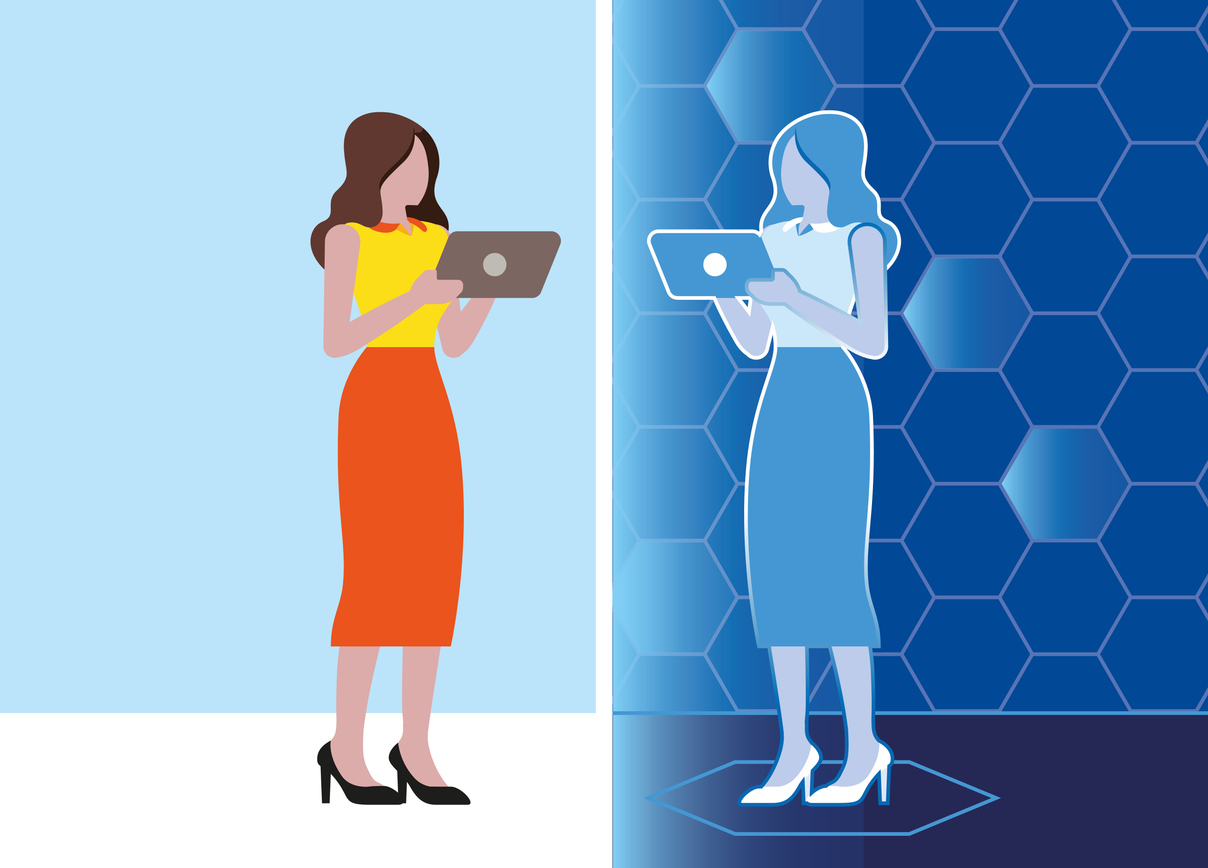

In today’s hyper-accelerated release cycles, speed and quality often feel like opposing forces. Traditional testing approaches (manual scripts, record-and-playback tools, or even semi-automated frameworks) simply can’t keep up. They’re slow to create, expensive to maintain, and shallow in coverage. Enter Digital Twin technology, the engine that enables Appvance IQ (AIQ) to deliver 100X faster script generation and