Author: Kevin Surace

In a startling move that’s rippled through the tech world, IgniteTech CEO Eric Vaughan replaced nearly 80% of his workforce after employees resisted his AI-first strategy, a change he says he’d make again.

An Existential Shift in Culture, Not Just Tools

Vaughan believed generative AI wasn’t optional; it was existential. He introduced “AI Mondays,” mandated that every department, from

In the fast-moving world of software delivery, speed and accuracy are everything. Time isn’t just money; it’s market share, competitive advantage, and customer loyalty. Every defect that slips into production is a risk to your brand, your bottom line, and the trust you’ve built with your users, both internal and external. Yet, despite this reality, many

By Kevin Surace, CEO of Appvance

Every few months, headlines trumpet the latest “AI breakthrough.” A new co-pilot. A smarter recorder. An incremental feature that saves a few hours here or there. And every time, CIOs and CTOs ask the same question: is this worth the disruption of implementing new systems? Peter Diamandis put it

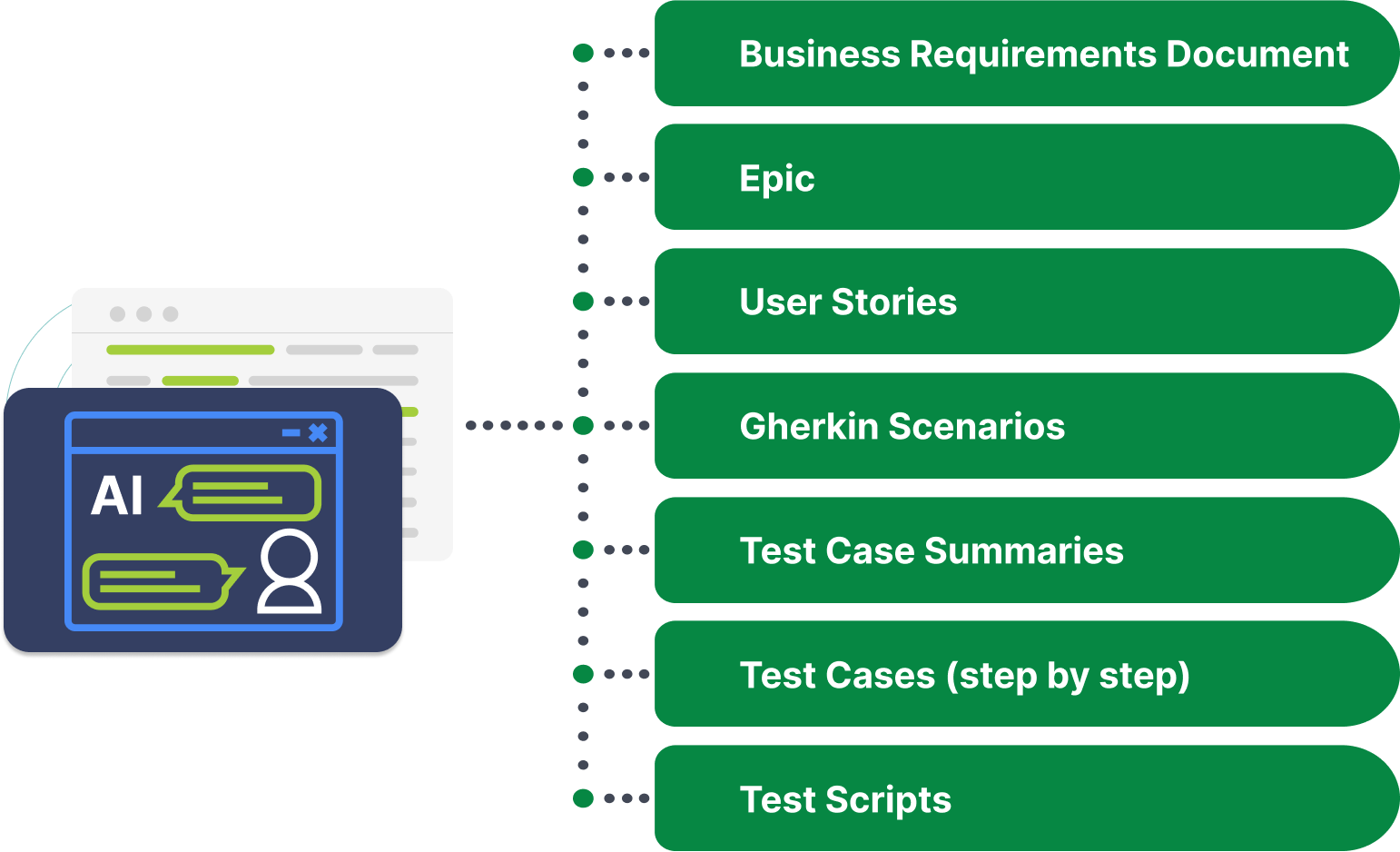

A recent email from ASTQB warned testers that to survive in an AI-driven world, they’ll need “broad testing knowledge, not just basic skills.” The advice isn’t wrong—but it misses the bigger picture. The real disruption is already here, and it’s moving faster than most realize. AI systems like AI Script Generation (AISG) and GENI are already generating, executing, and

A recent CIO article revealed a startling reality: 31% of employees admit to sabotaging their company’s generative AI strategy. That’s nearly one in three workers actively slowing down, blocking, or undermining progress. Now layer in the math: most AI initiatives involve dozens of employees. That means that, statistically, almost every project or proof of concept is being impacted by one or
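The “layer in the math” step can be made concrete with the complement rule. The sketch below is an illustration only. It assumes that each person on a project independently has the same 31% chance of undermining it, which the survey figure does not establish. The function name `prob_at_least_one_saboteur` and the team sizes are hypothetical choices, with “dozens” read as 12 to 48 people.

```python
def prob_at_least_one_saboteur(team_size: int, rate: float = 0.31) -> float:
    """Probability that at least one of `team_size` people undermines a project.

    Assumption (not from the source): each person does so independently
    with the same probability `rate`.
    """
    if team_size < 0:
        raise ValueError("team_size must be non-negative")
    if not 0.0 <= rate <= 1.0:
        raise ValueError("rate must be between 0 and 1")
    # Complement rule: P(at least one) = 1 - P(nobody) = 1 - (1 - rate) ** n
    return 1.0 - (1.0 - rate) ** team_size


if __name__ == "__main__":
    # Hypothetical team sizes standing in for "dozens of employees".
    for n in (1, 12, 24, 48):
        print(f"team of {n:>2}: {prob_at_least_one_saboteur(n):.4f}")
```

Under these assumptions, a team of 12 already has roughly a 99% chance of including at least one person who undermines the effort. Larger teams push that figure even closer to certainty.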

For decades, software testing has been built on a simple idea: humans write tests, machines run them. That model has persisted since the first commercial recorders appeared in the mid-1990s. Testers would record a flow, edit a script, maintain it as the application evolved, and repeat the cycle endlessly. Tools improved incrementally, but the basic

For decades, software quality assurance has been a human-driven task. Teams write test cases, automate scripts, execute them manually or with tools, and then maintain those tests across releases. This work is detail-oriented and repetitive, and it has long resisted full automation. In the United States alone, there are roughly 205,000 software QA analysts and testers, according to the Bureau

MIT just issued a wake-up call: despite $30–40 billion poured into generative AI, 95% of corporate AI pilots are failing to deliver financial returns. Enterprises are stuck in proof-of-concept purgatory while startups are racing ahead, scaling AI-native businesses from day one. Peter Diamandis put it bluntly: bureaucracy is the trap. Large organizations are trying to

In a marketplace flooded with “AI-washed” claims, distinguishing real generative AI from superficial automation is more critical than ever—especially in the high-stakes realm of end-to-end software testing. For organizations evaluating AI-powered testing platforms, asking the right questions can uncover massive differences in capability, scale, and ROI. At Appvance, we’ve engaged with hundreds of QA and

The rise of “generative AI” in software testing has sparked excitement across the industry—but it’s also led to widespread misconceptions. One of the most persistent myths? That the mere presence of generative AI means faster testing and higher productivity. In reality, some so-called generative AI implementations actually slow you down. A prime example: AI-driven assistants that let you type