Would you like to know within minutes whether a stack update changed the functionality or performance of a production application that has no test support?

Read on…

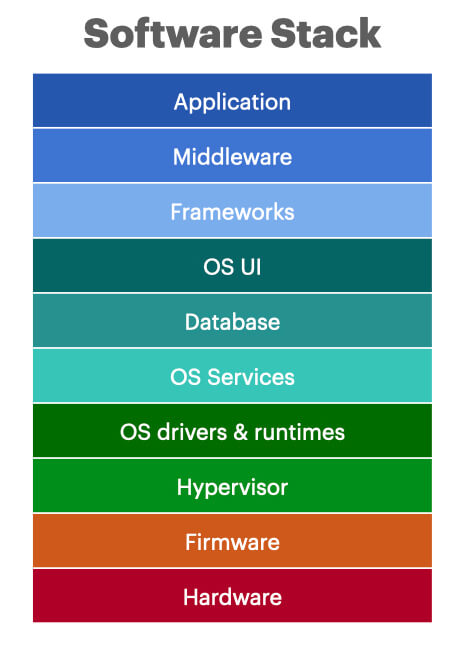

Ops teams have an obligation to keep every production application running, with functionality, performance and security intact. However, they also have an obligation to update layers in the stack with new versions and security patches. A large enterprise today may be responsible for thousands of applications that run its business. Over time, however, there may be no dev or QA team assigned to confirm that those applications still work correctly after stack updates. Even the ops team won’t know whether they continue to function and perform unless a user calls to report a problem after a stack upgrade.

AIQ for OPS closes this gap by applying AI to autonomously learn how an application works today in production and to compare that baseline with the results every time a change occurs. This differs from synthetic APM, where engineers write specific use cases that run periodically. With AIQ for OPS, no test cases need to be written by humans.

The AI system writes them itself and maintains a database of use cases, without human involvement, to test and compare runs, immediately flagging changes for web and native mobile applications.

ENTERPRISE CHALLENGE:

- Hundreds of applications are not regularly maintained or tested. No automated tests exist.

- We must update stack components to the latest versions for security and compatibility.

- Applications break on updates and we have to revert.

SOLUTION:

- Use AIQ’s AI-based autonomous test creation to auto-generate hundreds of scripts with validations against the current stack. No QA or dev team is required.

- Automatically re-run those same scripts after any stack update.

- AIQ will flag any differences in application actions or outcomes in minutes.
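
To make the baseline-and-compare idea concrete, here is a minimal Python sketch of the general technique. It is not AIQ's implementation or API: the `Outcome` structure, the `compare_runs` function, the use case names and the 1.5x slowdown threshold are all hypothetical, chosen only to illustrate how outcomes recorded on the current stack might be compared with a re-run after an update.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    """Result of one recorded use case (hypothetical structure, not AIQ's)."""
    final_page: str    # page or screen the flow ended on
    status: str        # "pass" or "fail" for the flow's validations
    elapsed_ms: float  # total time taken by the flow


def compare_runs(baseline, candidate, slowdown_ratio=1.5):
    """Return readable differences between a baseline run and a new run.

    slowdown_ratio is an assumed threshold: a flow is flagged as slower
    only when it takes more than this multiple of its baseline time.
    """
    findings = []
    for name, before in sorted(baseline.items()):
        after = candidate.get(name)
        if after is None:
            findings.append(f"{name}: missing from the new run")
            continue
        if after.status != before.status:
            findings.append(f"{name}: status {before.status} -> {after.status}")
        if after.final_page != before.final_page:
            findings.append(
                f"{name}: ended on {after.final_page}, expected {before.final_page}"
            )
        if after.elapsed_ms > before.elapsed_ms * slowdown_ratio:
            findings.append(
                f"{name}: slowed from {before.elapsed_ms:.0f} ms "
                f"to {after.elapsed_ms:.0f} ms"
            )
    return findings


# Outcomes captured on the current stack (illustrative values).
baseline = {
    "login": Outcome("/dashboard", "pass", 800.0),
    "checkout": Outcome("/receipt", "pass", 1200.0),
}

# Outcomes from re-running the same use cases after a stack update.
after_update = {
    "login": Outcome("/dashboard", "pass", 850.0),
    "checkout": Outcome("/error", "fail", 2500.0),
}

for finding in compare_runs(baseline, after_update):
    print(finding)
```

In this made-up data the login flow is unchanged, while the checkout flow is flagged three times: its validations now fail, it ends on a different page, and it runs more than 1.5 times slower than its baseline.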

Learn more by requesting a demo at www.appvance.ai/get-demo