This post is the second in a 2-part series.

As I discussed in my prior post, the Autonomous Software Testing Manifesto, AI gives you, the Automation Engineer, the power to test broadly and deeply across your application. That gives you the opportunity to reevaluate your test strategy and decide what will be most effective at finding bugs. With AI in the picture, how we plan for and execute against testing requirements changes significantly.

Human-Written tests (ML-backed)

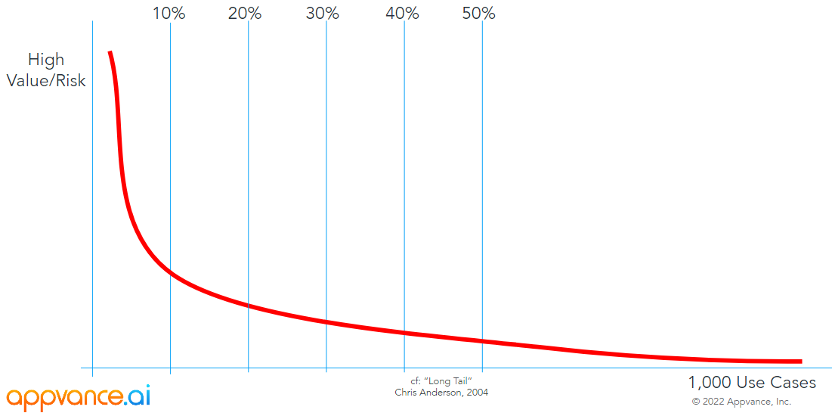

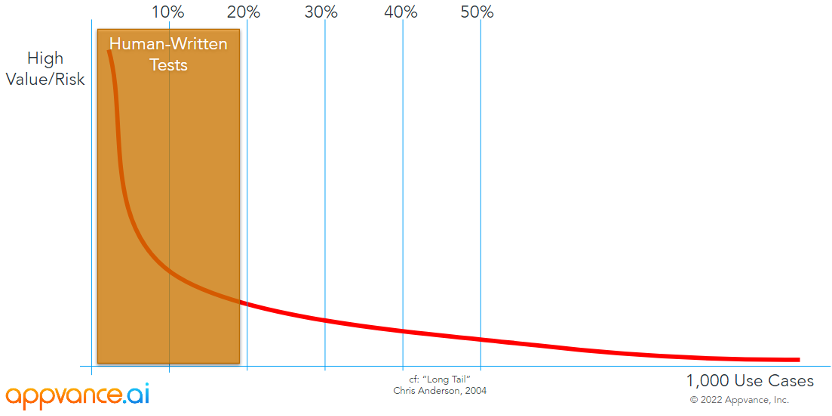

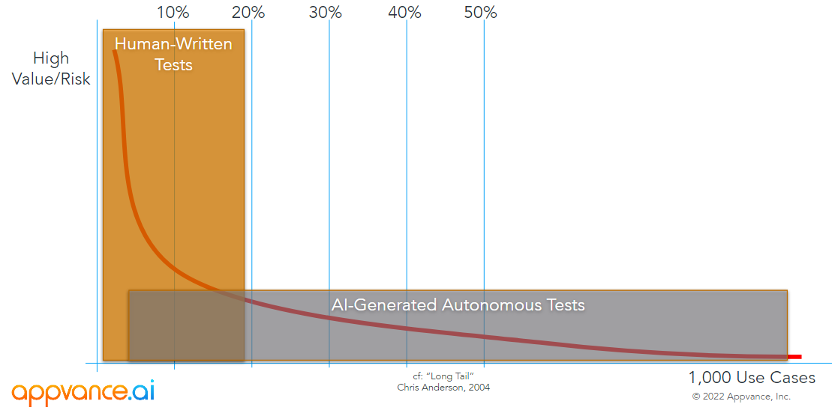

The first decision is which tests human testers need to write and which the AI will generate autonomously. Following the Pareto Principle, the “vital few” that must be written explicitly typically make up around 20% of the total test cases (this varies by application). These are the test cases that cover the high-risk and/or high-value capabilities of your application. Human-written test cases almost always have a sponsor (compliance, audit, finance, HR, legal) who requires empirical proof that the application behaves as prescribed and always produces the expected results.
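To make the 20% cut concrete, here is a minimal Python sketch that ranks candidate scenarios by a risk × value score and keeps roughly the top fifth for human authoring. The `Scenario` class, the 1 to 5 scales, and the multiplicative score are illustrative assumptions, not part of AIQ; your own risk assessment would replace them.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Scenario:
    name: str
    risk: int   # hypothetical scale: 1 (low) to 5 (high)
    value: int  # hypothetical scale: 1 (low) to 5 (high)


def select_vital_few(scenarios: List[Scenario], fraction: float = 0.2) -> List[Scenario]:
    """Rank by risk * value and keep roughly the top `fraction` (at least one)."""
    ranked = sorted(scenarios, key=lambda s: s.risk * s.value, reverse=True)
    count = max(1, round(len(ranked) * fraction))
    return ranked[:count]


scenarios = [
    Scenario("checkout payment", risk=5, value=5),
    Scenario("payroll export", risk=5, value=4),
    Scenario("audit log", risk=4, value=4),
    Scenario("profile avatar", risk=1, value=2),
    Scenario("theme toggle", risk=1, value=1),
]
print([s.name for s in select_vital_few(scenarios)])  # ['checkout payment']
```

Everything that does not make the cut is left to the autonomous and cognitive tests rather than being scripted by hand.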

If you’re using an integrated testing platform such as AIQ for test development, AI will assist you as you write your tests. It uses Machine Learning to choose the best accessors (locators) for you and identifies “fallback” accessors in case things change, reducing maintenance and spurious failures (tests that break because a locator changed, not because the application did). Even more importantly, it will self-heal your scripts when your user interface changes.
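The fallback idea can be illustrated with a small Python sketch: try the preferred accessor first and move down the list only when it no longer resolves. The page is modeled as a plain dictionary from locator strings to elements, and `find_with_fallbacks` is a hypothetical helper; AIQ's actual accessor selection is ML-driven and is not shown here.

```python
from typing import Dict, List, Optional, Tuple


def find_with_fallbacks(
    page: Dict[str, str], locators: List[str]
) -> Optional[Tuple[str, str]]:
    """Try each locator in priority order; return (locator, element) for the first match."""
    for locator in locators:
        element = page.get(locator)
        if element is not None:
            return locator, element
    return None  # every accessor failed: a genuine failure worth reporting


# Hypothetical page snapshot after a UI change: the button's id was renamed,
# but its data-test attribute and visible text still match.
page_after_change = {
    "#submit-order-v2": "<button>Place order</button>",
    "[data-test=submit-order]": "<button>Place order</button>",
    "text=Place order": "<button>Place order</button>",
}

# Primary accessor first, then fallbacks (the ranking is assumed here, not learned).
locators = ["#submit-order", "[data-test=submit-order]", "text=Place order"]
print(find_with_fallbacks(page_after_change, locators)[0])  # [data-test=submit-order]
```

Because the renamed id no longer resolves, the step continues with the `data-test` accessor instead of failing; only when every accessor misses does the helper return `None`.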

The outcome of this approach is that far fewer use cases need to be explicitly defined, which reduces the effort required of both your requirements engineers and your automation engineers.

Autonomous tests (AI Blueprinting)

The work that the AI is going to do now needs to be codified. The domain knowledge that must be transferred to the AI during the training phase can be organized into three categories:

- When the AI sees a possible action, what should it do? For example, when the AI sees a particular Popup Box, it should click “like” 33% of the time, click “unlike” 33% of the time, and do nothing the remaining 34% of the time.

- When the AI sees dependencies, what should it test? For example, when the AI sees a Quantity, a Price, and a Cost, it should validate that Quantity × Price = Cost.

- When form fields are encountered, what data should it enter? For example, when the AI sees Date of Birth, it should enter a date that is before today and not more than 120 years ago.

We call these the “see this: do this”, “see this: test this”, and “see this: enter this” rules.
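Here is one way to express the three example rules as plain Python functions. This is a sketch, not AIQ's rule syntax: the function names, the seeded `random.Random`, and the half-cent tolerance on the cost check are assumptions made for illustration.

```python
import random
from datetime import date, timedelta


# "See this: do this": weighted choice of what to do with a particular popup.
def popup_action(rng: random.Random) -> str:
    return rng.choices(["like", "unlike", "nothing"], weights=[33, 33, 34])[0]


# "See this: test this": validate that Quantity x Price = Cost.
# The tolerance is an assumption to absorb rounding to whole cents.
def cost_is_consistent(quantity: int, price: float, cost: float, tol: float = 0.005) -> bool:
    return abs(quantity * price - cost) <= tol


# "See this: enter this": a Date of Birth before today and at most 120 years ago.
def random_date_of_birth(rng: random.Random, today: date) -> date:
    try:
        earliest = today.replace(year=today.year - 120)
    except ValueError:  # today is Feb 29 and the year 120 years ago was not a leap year
        earliest = today.replace(year=today.year - 120, day=28)
    latest = today - timedelta(days=1)
    offset = rng.randint(0, (latest - earliest).days)  # inclusive on both ends
    return earliest + timedelta(days=offset)


rng = random.Random(42)  # seeded so a run can be reproduced
print(popup_action(rng))
print(cost_is_consistent(3, 19.99, 59.97))  # True
print(random_date_of_birth(rng, date(2024, 5, 1)))
```

Seeding the generator keeps the randomized choices varied across runs when the seed changes, yet repeatable when a failure needs to be reproduced.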

This process, which we call AI Blueprinting, is iterative: the human focuses, targets, and trains the AI to drive deep into the application, following every possible pathway and finding opportunities to apply the rules it has been trained to recognize.
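As a rough model of that exploration, the Python sketch below walks a hypothetical map of screens breadth-first and records every place a trained rule applies. The `APP` map and `RULES` table are invented for the example, and breadth-first search is simply a convenient stand-in; it is not a description of how AIQ actually drives through an application.

```python
from collections import deque
from typing import Dict, List, Set, Tuple

# Hypothetical application model: each screen lists the fields it shows and the
# screens reachable from it. A real AI explores a live UI rather than a static map.
APP = {
    "home": {"fields": set(), "links": ["catalog", "account"]},
    "catalog": {"fields": set(), "links": ["product"]},
    "account": {"fields": set(), "links": ["profile"]},
    "product": {"fields": {"Price"}, "links": ["cart"]},
    "profile": {"fields": {"Date of Birth"}, "links": []},
    "cart": {"fields": {"Quantity", "Price", "Cost"}, "links": ["checkout"]},
    "checkout": {"fields": {"Quantity", "Price", "Cost"}, "links": []},
}

# A rule fires on any screen that shows all of its required fields.
RULES = {
    "Quantity x Price = Cost": {"Quantity", "Price", "Cost"},
    "Date of Birth": {"Date of Birth"},
}


def explore(app: dict, start: str, rules: Dict[str, Set[str]]) -> List[Tuple[str, str]]:
    """Breadth-first walk of every reachable screen, recording where each rule applies."""
    seen = {start}
    queue = deque([start])
    findings = []
    while queue:
        screen = queue.popleft()
        for rule_name, required in rules.items():
            if required <= app[screen]["fields"]:
                findings.append((screen, rule_name))
        for next_screen in app[screen]["links"]:
            if next_screen not in seen:  # visit each screen once, even if linked twice
                seen.add(next_screen)
                queue.append(next_screen)
    return findings


for screen, rule in explore(APP, "home", RULES):
    print(f"{screen}: apply {rule}")
```

The product screen shows only a Price, so the Quantity × Price = Cost rule first fires on the cart and then again on checkout.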

Where the requirements engineer previously specified use cases, they now specify domain knowledge: the “universal truths”, as it were, of the application. The broader a rule, the more often the AI will apply it; the narrower, the less often it will be used.

Each one of these rules is specified in two parts:

- How the AI will know the rule applies – the “see this” part, and

- What the AI does when it applies – the “do this”/“test this”/“enter this” part
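The two-part structure maps naturally onto a pair of functions: a predicate for the “see this” part and an action for the rest. In this hypothetical Python sketch (the `Rule` class, the element dictionaries, and the rule names are assumptions), a narrow rule and a broad rule are run over the same form to show why broader rules get applied more often.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Element = Dict[str, str]  # hypothetical element description, e.g. {"kind": "input", "label": "..."}


@dataclass
class Rule:
    name: str
    sees: Callable[[Element], bool]  # the "see this" part: does the rule apply?
    does: Callable[[Element], str]   # the "do this"/"test this"/"enter this" part


def apply_rules(rules: List[Rule], elements: List[Element]) -> List[Tuple[str, str]]:
    """Return (rule name, outcome) for every element that each rule matches."""
    return [
        (rule.name, rule.does(element))
        for element in elements
        for rule in rules
        if rule.sees(element)
    ]


narrow = Rule(
    "Date of Birth",
    sees=lambda e: e["kind"] == "input" and e["label"] == "Date of Birth",
    does=lambda e: "enter a date before today, within 120 years",
)
broad = Rule(
    "any date field",
    sees=lambda e: e["kind"] == "input" and "Date" in e["label"],
    does=lambda e: "enter a valid calendar date",
)

form = [
    {"kind": "input", "label": "Date of Birth"},
    {"kind": "input", "label": "Start Date"},
    {"kind": "input", "label": "End Date"},
    {"kind": "button", "label": "Submit"},
]

print(Counter(name for name, _ in apply_rules([narrow, broad], form)))
```

On this form the broad rule fires three times and the narrow one once: the broad rule earns more coverage, while the narrow one can encode a more specific expectation.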

Just a few hours of training can yield a large amount of application coverage.

In this way, the autonomously generated tests can be optimized so that near-complete application coverage is exercised in just a few minutes.

AI-Generated Cognitive Tests

Once the AI has been trained, it can also generate regression tests for you automatically, based on real user activity. By analyzing PII-free logs from environments where users have been exercising the application (UAT and Production), the AI can create regression tests that contain real user patterns. These user flows are invaluable because they represent how users actually use the application, not how the product team believes they do. Further analysis can even reveal which usage patterns and features are used most.
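A minimal sketch of turning such logs into candidate flows: group events by session, order them by timestamp, and count identical page sequences. The `(session_id, timestamp, page)` record format is a hypothetical assumption, the logs are assumed to be PII-free already, and AIQ's own log analysis is not shown here.

```python
from collections import Counter, defaultdict
from typing import Iterable, Tuple

Event = Tuple[str, int, str]  # hypothetical (session_id, timestamp, page) log record


def user_flows(events: Iterable[Event]) -> Counter:
    """Rebuild each session's page sequence and count how often each flow occurs."""
    sessions = defaultdict(list)
    for session_id, _ts, page in sorted(events, key=lambda e: (e[0], e[1])):
        sessions[session_id].append(page)
    return Counter(tuple(pages) for pages in sessions.values())


def feature_usage(events: Iterable[Event]) -> Counter:
    """Count visits per page, a rough proxy for which features are used most."""
    return Counter(page for _sid, _ts, page in events)


# PII-free sample; events arrive out of order, as they can when logs are merged.
log = [
    ("s2", 6, "search"), ("s1", 1, "login"), ("s3", 2, "login"),
    ("s1", 2, "search"), ("s2", 5, "login"), ("s1", 3, "checkout"),
    ("s3", 4, "profile"), ("s2", 7, "checkout"),
]

print(user_flows(log).most_common(1))  # [(('login', 'search', 'checkout'), 2)]
print(feature_usage(log)["login"])  # 3
```

Each distinct flow is a candidate regression test, and the counts indicate which ones reflect the most common real usage.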

Single, Double, Triple Coverage

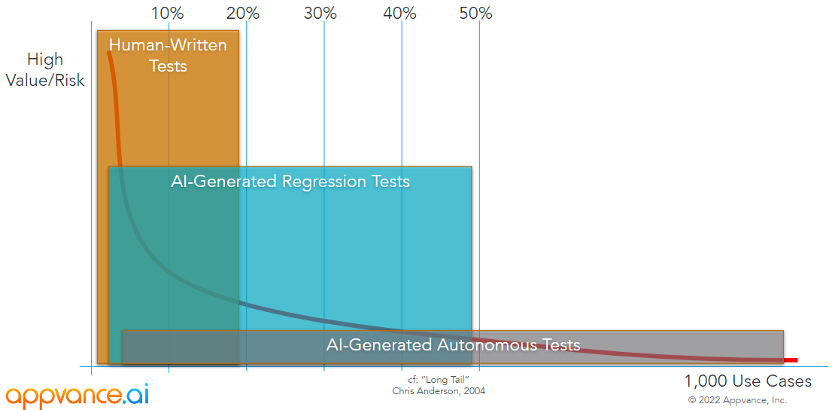

Using each of the AI-backed technologies in Appvance IQ, we are able to provide Application Coverage in three ways:

- Triple Coverage where it matters the most (high-risk/high-value) – from the Human Written (ML-backed) tests that come from the defined use cases, the Autonomous (AI Blueprint) tests that come from the training the AI receives, and the Cognitive (AI-generated) tests guaranteeing coverage of real user activity

- Double Coverage in the areas most used by the users (high-traffic/high-function) – from the Autonomous (AI Blueprint) and AI-generated Cognitive tests

- Single Coverage in the areas of the application that perhaps would otherwise never be tested – from the Autonomous (AI Blueprint) tests

When the full spread of human-written, AI Blueprint, and AI-generated tests is combined, test coverage is both comprehensive and dense. The result is triple coverage for the most critical code, double coverage for the important code, and single coverage for everything else.
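The layering can be illustrated by counting, for each area of the application, how many kinds of test exercise it. The area names and coverage sets in this Python sketch are hypothetical; real coverage would come from your test runs, not from hand-written sets.

```python
from typing import Dict, List, Set


def coverage_layers(sources: Dict[str, Set[str]]) -> Dict[str, int]:
    """Count how many test sources exercise each application area."""
    layers: Dict[str, int] = {}
    for areas in sources.values():
        for area in areas:
            layers[area] = layers.get(area, 0) + 1
    return layers


def group_by_depth(layers: Dict[str, int]) -> Dict[int, List[str]]:
    """Invert to {coverage depth: sorted areas}, e.g. 3 for triple coverage."""
    grouped: Dict[int, List[str]] = {}
    for area, depth in layers.items():
        grouped.setdefault(depth, []).append(area)
    return {depth: sorted(areas) for depth, areas in sorted(grouped.items(), reverse=True)}


# Hypothetical areas covered by each kind of test.
sources = {
    "human-written": {"payments", "payroll"},
    "ai-blueprint": {"payments", "payroll", "search", "settings", "help"},
    "cognitive": {"payments", "payroll", "search"},
}

print(group_by_depth(coverage_layers(sources)))
# {3: ['payments', 'payroll'], 2: ['search'], 1: ['help', 'settings']}
```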

Superpower your testing

What if you could clone yourself? How would you approach your quality assurance projects? Would you put as many copies of yourself as possible to work pounding away at keyboards, so that every possible pathway through the application gets taken?

You now have an army of testers as knowledgeable as you. You get to target them at the most critical parts of the application, and they will find bugs. That’s what autonomous testing provides.

AI delivers:

- Faster human-written test authoring and design with ML-assisted accessor optimization

- Automatic human-written test resiliency with fallback accessors – adapting live during test execution

- Autonomously healed human-written tests, greatly reducing the human effort for test maintenance

- Nearly 90% Application Coverage with domain-knowledge-trained Autonomous Testing (AI Blueprinting)

- 100% Application Coverage of all actively used functionality with AI-Generated Cognitive testing

In other words, you become a superhuman valued for your domain expertise, not for how fast you can write scripts or execute test cases.

You can read Kevin’s prior post, the Autonomous Software Testing Manifesto, or watch his recent webinar, A Strategic Approach to Delivering Software Quality.