The advances in AI and ML now make it possible to create expert systems that know both how applications are designed and how they behave. These systems can absorb the domain-specific instructions that enable them to replicate the behaviors of experienced QA testers with years of application-specific knowledge.

With the capacity to deploy artificial intelligence at scale, unprecedented application test coverage is now achievable, and even the most elusive issues can finally be exposed. That’s the beauty of AI-driven autonomous testing.

Level-5 Autonomous Testing

For more than three decades, testing has remained the one phase of the software delivery lifecycle yet to be truly automated. Testing, including the writing of test scripts, is still a manual effort, with next to no support from technology that makes the testing work product (the tests themselves as well as the test results) smarter.

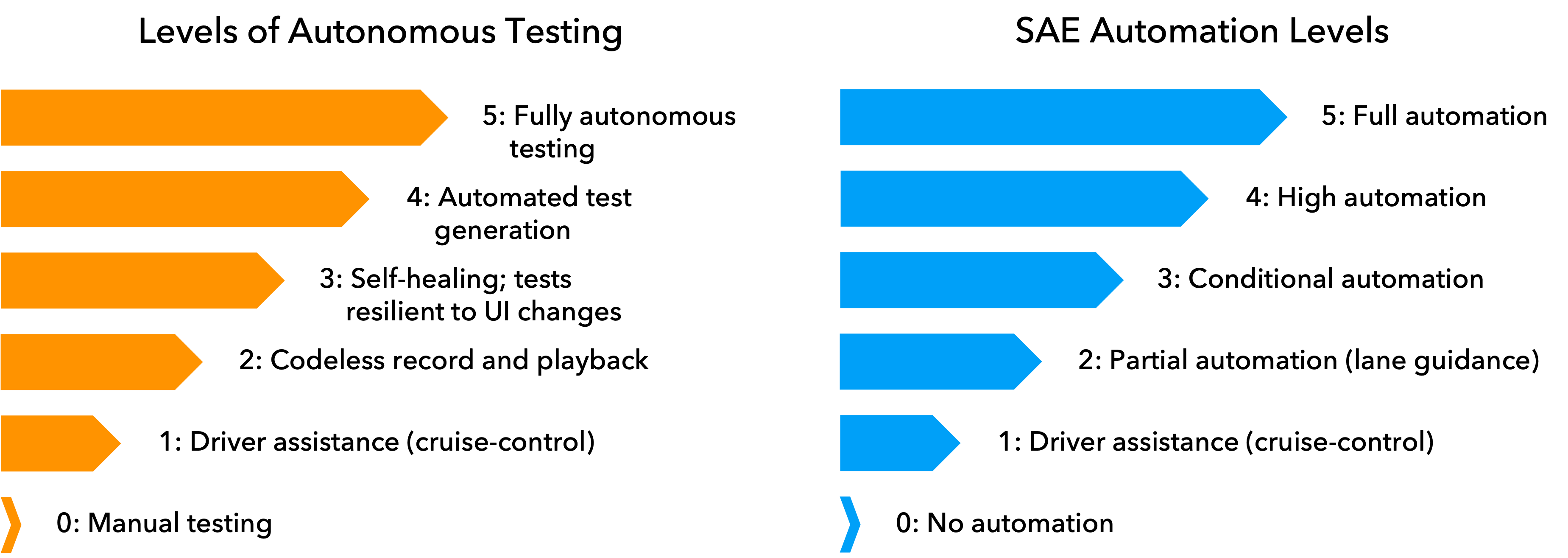

But the more recent application of AI/ML to writing scripts, and to automating other parts of the testing process such as bug reporting, has opened up new possibilities for efficiency. These are captured in the Six Levels of Autonomous Testing, first introduced in 2017.

The Levels of Autonomous Testing is a 6-point scale modeled after the Society of Automotive Engineers (now known as SAE International) model for self-driving levels.

These changes mean that our approach to automated software testing needs to be modernized to exploit the capabilities of the technology now available. We need to stop measuring the success of an automation project by the number of test scripts written and measure it, instead, by the number of bugs found.

To support this shift in mindset, we’ve developed a Testing Manifesto for autonomous testing.

Autonomous Software Testing Manifesto

1. The only reason to test is to find issues

“If you don’t care about it, don’t waste your time testing it”

Any time we invest in developing automated tests should yield results we care about, that is: issues found or no issues found. When we test autonomously, the same rule applies—don’t spin up the AI and have it test everything; target the AI where its capabilities are going to yield the most valuable results.

2. Not all issues are created equal

“Some bugs will never be fixed; and that’s ok!”

We must accept that finding a bug does not mean that it will be fixed, ever. Our development and test resources are finite, and they should focus on those things that represent the highest risk and reward for the organization. But it is important that we find the bugs before our users do. Then the resolution is in our hands and we are never surprised.

3. Manual testing is the exception

“It might be expedient, but it’s not worth your time”

There will always be some things that have to be tested manually, but they should be rare and exceptional. A manual test can be written as an automated test in almost the time it takes to perform it manually. Modern level-2 recorders work at the speed of the manual tester and write the majority of the script for you. Even if automating a test takes twice as long as performing it manually, by the time you have run the automation three times, you are better off.

If there is a physical device that must be inserted, you will likely need to test this manually.
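The break-even claim above can be checked with a few lines of arithmetic. The sketch below is illustrative only: the function name `break_even_runs` and its parameters are hypothetical, and it assumes that an automated run costs the tester essentially nothing once the script exists.

```python
def break_even_runs(manual_minutes: float,
                    automation_factor: float = 2.0,
                    automated_run_minutes: float = 0.0) -> int:
    """Smallest number of runs at which automating is strictly cheaper
    than repeating the test by hand.

    Assumptions (not from any specific tool): building the automated test
    costs `automation_factor` times one manual run, and every automated
    run costs `automated_run_minutes` of tester time.
    """
    if automated_run_minutes >= manual_minutes:
        raise ValueError("automation never pays off if each automated run "
                         "costs as much as a manual one")
    build_cost = automation_factor * manual_minutes
    runs = 1
    # Keep adding runs while the automated total is not yet below the manual total.
    while build_cost + runs * automated_run_minutes >= runs * manual_minutes:
        runs += 1
    return runs


# A 10-minute manual test that takes 20 minutes to automate:
print(break_even_runs(manual_minutes=10))  # → 3
```

Under these assumptions the costs are equal at two runs and automation wins from the third run on, which is the figure quoted above. If an automated run still needs some attention from the tester, the break-even point moves out accordingly.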

4. Automated testing is the rule

“You’ll thank yourself later, and again, and again, and again”

Traditionally, automated testing has failed because the need for script maintenance often overwhelms the testing team, and we get into the “it’s quicker to do it manually” debate. Level-3 recorders can build resiliency and healing into the script that simulates human behavior, so that changes to the look and feel of the user interface do not cause the script to fail with what is essentially a false positive (a failure that reflects no real defect in the application).

Indeed, the very best level-3 recorders can self-heal the script by rewriting the parts that have changed and updating the script to reflect the new and changed content of the user interface.
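To make the idea of resiliency and self-healing concrete, here is a minimal sketch of one common approach: identify each element in more than one way, fall back when the preferred identifier stops matching, and record the new identifier for later runs. This is an assumption-laden illustration, not how any particular recorder works. The `Locator` class, the `find_element` function, and the dictionary-based page model are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Locator:
    """Several independent ways to identify one UI element (hypothetical model)."""
    element_id: str
    text: str
    role: str


def find_element(page: list[dict], locator: Locator) -> Optional[dict]:
    """Return the matching element, trying the id first and then falling back."""
    # 1. Preferred: exact id match.
    for element in page:
        if element.get("id") == locator.element_id:
            return element
    # 2. Fallback: same role and visible text, the way a human tester
    #    would still recognise the control after a redesign.
    for element in page:
        if element.get("role") == locator.role and element.get("text") == locator.text:
            # "Heal" the script: remember the new id for later runs.
            locator.element_id = element.get("id", locator.element_id)
            return element
    return None


# The same button before and after a UI change that renamed its id.
old_page = [{"id": "btn-submit", "role": "button", "text": "Submit"}]
new_page = [{"id": "btn-send-42", "role": "button", "text": "Submit"}]

loc = Locator(element_id="btn-submit", text="Submit", role="button")
assert find_element(old_page, loc) is old_page[0]

healed = find_element(new_page, loc)
print(healed["id"], loc.element_id)  # → btn-send-42 btn-send-42
```

The script keeps passing after the id changes, and the stored locator is updated, so the next run takes the fast path again. A real tool would weigh many more signals than the two used here.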

There is no excuse for not doing automated tests.

5. Autonomous testing is the difference

“Teach the AI to test, and you’ll test for a lifetime”

When you transfer your domain knowledge and experience to the AI, you shift from being a tester to being a test designer. Your role goes from the tactical (did the build break anything?) to the strategic (where do we need to go deeper, or gain broader coverage?).

An hour of training the AI on how to behave, validate, and enter the appropriate (or inappropriate) data will yield hundreds of unique, autonomously designed tests of your application. Ten hours of training will yield thousands. You can achieve a powerful level of productivity that is superhuman.

Moreover, level-5 AI can learn and adapt, so once trained, the system can test new functionality it discovers without further training. It asks for human assistance only occasionally, when it encounters something novel.

As in any engineering discipline, we need to use the right tool for the task at hand. Just because we have a hammer, we must not see everything as a nail. With Appvance IQ comes the most powerful innovation in software test automation in more than three decades. For the first time, you get to task armies of AIs to dive deep and broad into the application and test, to your exacting demands, every place they can reach. This means we should no longer look at a testing project with 1,000 use cases as daunting, but as an opportunity to determine which test strategy will be most effective in finding bugs, because that’s really our job.

Kevin Parker first spoke about the Autonomous Software Testing Manifesto in his July 7 webinar hosted by TechWell. If you missed the webinar and you’re curious to know more, you can access the recording here.

This is the first of a two-part blog post. You can read Part 2 here.